“Look, I need these employees to do their jobs. Right now, that isn’t happening. I don’t care about the passing score on the eLearning. What’s the point of testing them if they can get 100% and still have no idea what they’re supposed to do when they clock in?”

Harsh.

This frustration often comes out when people think job training works the same way as testing does in schools. But it doesn’t.

Why Traditional Testing Doesn’t Work at Work

You probably sat through a lot of testing when you were in school. Chapter quizzes, semester exams, and standardized tests all do the same thing: measure what students know. Since passing classes and graduating depended on test scores, it made sense for you to learn “how to test.” You went through the material that would be on the test, so you could pass the test. Simple as that. Since it’s what they’re familiar with, many people assume that workforce training can follow the same pattern and be effective.

But multiple-choice and true/false questions don’t appear in front of people during their workday. Subjects and topics don’t come up in clearly categorized situations. They’re dealing with real tasks and real problems. Everything is mixed together and interdependent. People don’t have a fixed set of options to choose from. They could literally do anything, even things that “don’t make sense.” And even if they know what to do, that can be different from knowing how or why to do it.

School is a sterile, simplified environment for testing knowledge. It doesn’t have the messiness and urgency of real-world decisions or application. Knowing and doing are two very different things. Yes, people generally need to know how to do something in order to do it. But your organization’s learning solutions will fall short if they stop at having learners “know” and never move on to “doing.” If there isn’t any application, there won’t be any transfer.

Common Workplace Testing Pitfalls

Testing shortly after content is presented

Example: If there’s a test at the end of an eLearning module or classroom training, you’re testing short-term memory, not comprehension or application.

Treating testing as a one-time event

Example: A salesperson attends a seminar about their company’s new CRM system. The facilitator has them do a few basic tasks and signs off that they’ve passed. They’re never evaluated again. If you test a skill once, that’s just a snapshot of that moment. It doesn’t show improvement or degradation over time.

Making tests easy so learners can pass on the first try

Example: Which one of the following is unethical?

- Admitting a mistake

- Lying to your boss

- Forgetting a meeting

- Using vacation time

If questions don’t require the average person to think, they’re probably not good questions. If everyone can pass on the first try, they’re too easy to have any real value.

Favoring unrealistic testing methods

Example: Using a drag-and-drop activity to put steps in the correct order assumes that the learner can recall all the steps. They may not. And when do your employees work with drag-and-drop activities on the job?

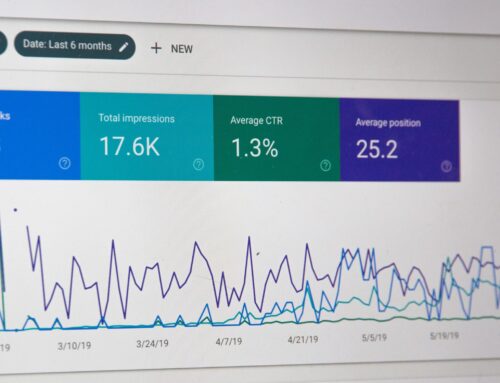

Focusing on completion rates

Example: 600 learners completed the mandatory compliance training. So what? A completion only shows that someone took the time to do something. It doesn’t tell you whether they actually tried or just kept clicking until they reached the passing score.

Assuming that “knowing about” something is the same as being able to do it

Example: A new fry cook knows they need to clean the fryer at the end of the day. That’s not the same as knowing the steps for cleaning the fryer or where to find the needed cleaning supplies.

Testing That Does Work at Work

Practical application and spaced repetition are key. They increase skill and knowledge transfer from the learning environment to real life. Role-playing, software simulations, decision-making scenarios, and other interactive forms of assessment are also more memorable than traditional testing. Few learners are going to recall a run-of-the-mill “Which of the following…” test. An escape room that has them apply what they learned, for instance, is something they’ll be talking about for a while. As they remember it, they’ll be remembering the content too. The point is that if a learner can actually do something, they’ve taken the next step beyond “just knowing.”

Here are some testing ideas that include application and spaced repetition:

- Regularly release new, realistic scenario activities on your LMS

- If learners work with a software system, periodically see if their performance data meets expectations (number of transactions processed per hour, help desk tickets closed, etc.)

- Send out reflection messages (digital or hard copy) a few days after a training event and ask learners to describe how they’ve used what they learned, or how they plan to use it

- Use adaptive and/or competency-based learning and track learners’ progress toward their goals
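
If your team can export performance data, the periodic check described above could be sketched in a few lines of Python. This is only an illustration under stated assumptions: the learner names, the hourly figures, the expected rate of 20, and the `needs_follow_up` helper are all hypothetical, and your own systems will store and report this data differently.

```python
from statistics import mean


def needs_follow_up(check_ins, expected_rate, recent=3):
    """Return True if the average of the most recent check-ins is below the expected rate.

    check_ins: transactions processed per hour, one value per periodic check-in
               (hypothetical data, oldest first).
    expected_rate: the rate your organization expects (an assumption for this sketch).
    recent: how many of the latest check-ins to average.
    """
    if not check_ins:
        # No data yet, so there is no evidence the skill has transferred.
        return True
    window = check_ins[-recent:]
    return mean(window) < expected_rate


# Hypothetical check-in history for two learners.
performance = {
    "learner_a": [18, 22, 25, 27],  # improving over time
    "learner_b": [24, 21, 17, 15],  # degrading over time
}

flagged = sorted(
    name
    for name, rates in performance.items()
    if needs_follow_up(rates, expected_rate=20)
)
print(flagged)
```

Averaging the last few check-ins, rather than looking at a single score, is what separates this from a one-time snapshot: it can surface a learner whose performance started strong and then slipped.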

Don’t fall into the habit of testing what your learners know. See what they can do instead. Treat learning and evaluation as ongoing processes, rather than one-time events. There’s a very good chance that your learners will perform better, and your bottom line will improve.
